Why is everyone forcing AI on us?

As a developer who’s spent the last few years knee-deep in social media apps, I’ve watched artificial intelligence go from a buzzword to the backbone of modern app development. Since Nvidia’s stock went through the roof and the AI arms race kicked off (it is still going today), it feels like every app is in a hurry to slap “AI-powered” on its feature list. But here’s the thing: not all AI is equal, and not all of it is welcome.

Some AI features genuinely make our lives easier, often in ways we barely notice. Others, though, are forced on us, and as both a developer and a user, I find that trend worrying. In this post, I want to share my perspective on why forced AI features are problematic, why background AI can be a quiet hero, and how we’re trying to get it right with WHY, the open-source, user-owned social media app I help build.

Why do I have a problem with AI?

This year I've been involved in multiple small-scale projects. We obviously needed a project management app, like Jira or Azure DevOps. I honestly love them: great tools, maybe even necessary for a dev team. So on one project we decided to use ClickUp, which is free for our purposes. Sounds good, right? Well, my honest opinion: bloated. From the moment you create a task board to the moment you create a task, you are prompted with a lot of AI-powered tools to help you achieve... whatever you need to achieve. Like, OK, useful, but why is this the default?

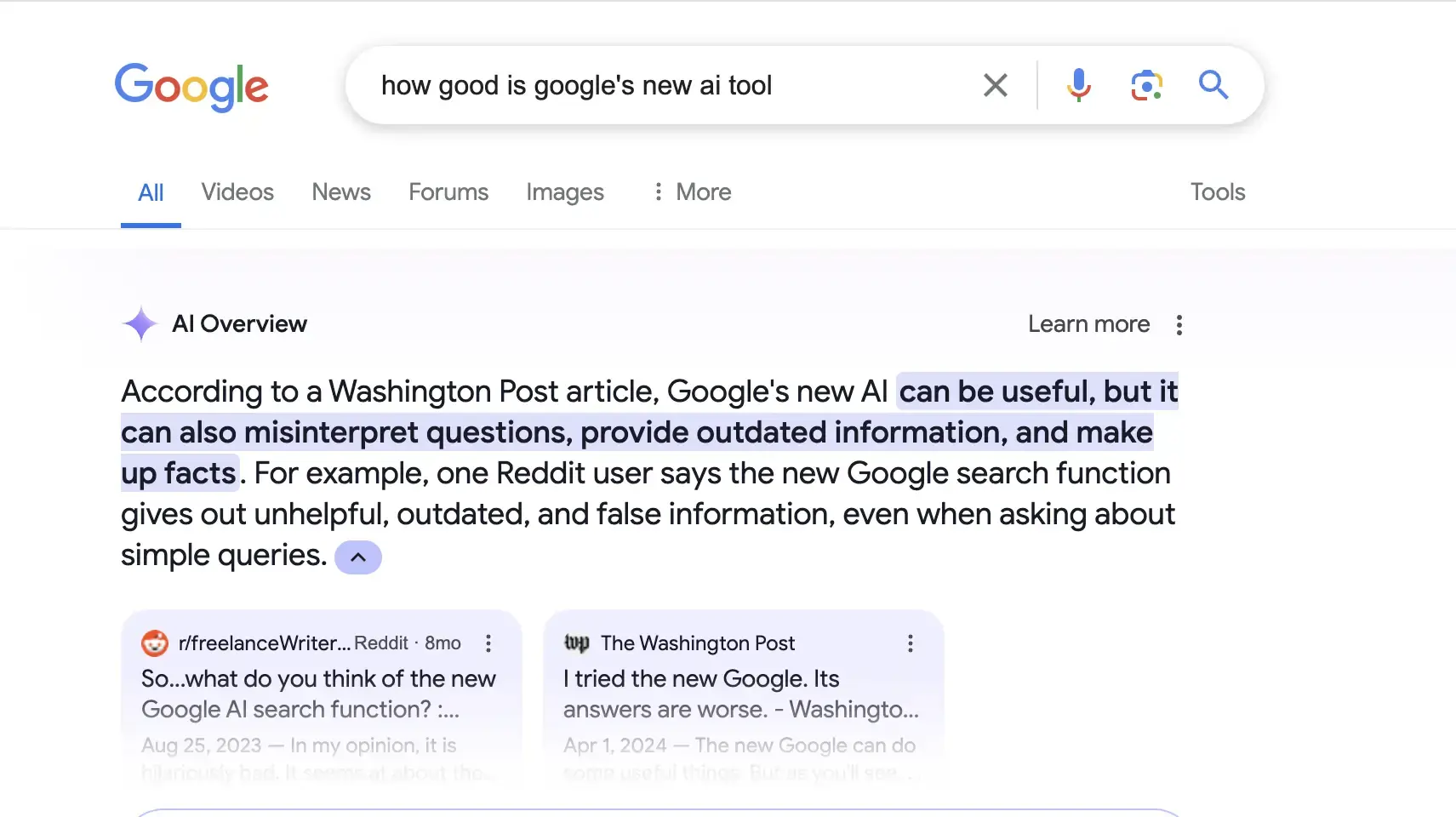

Another popular example is, literally, Google. Have you noticed anything different about Google Search lately? We sure did.

Let’s be real: nobody likes having features shoved down their throat, especially when it comes to AI. I’ve seen a wave of frustration on social media and in dev forums about this. One post that stuck with me was from @windcomecalling: “I literally cannot turn off the dogshit ‘AI summary’ at the top of my search results in the Google search app on my phone.” I’ve felt that pain myself: opting out, only to have the feature pop up anyway.

And it’s not just about annoyance. There are real risks. Remember the Air Canada chatbot? It gave a customer wrong information about bereavement fares, the case went to a tribunal, and the airline was held responsible. Your AI makes a mistake, and suddenly you’re liable.

Then there’s privacy. As a developer, I know how tempting it is to collect more data “for personalization”. But as a user, I get why people are worried. Forced AI features often mean more data collection, less transparency, and a creeping sense that you’re not really in control. I’ve seen users on X vent about this, especially when platforms like Facebook or X roll out new AI tools without any real opt-out.

However, there can be good developments

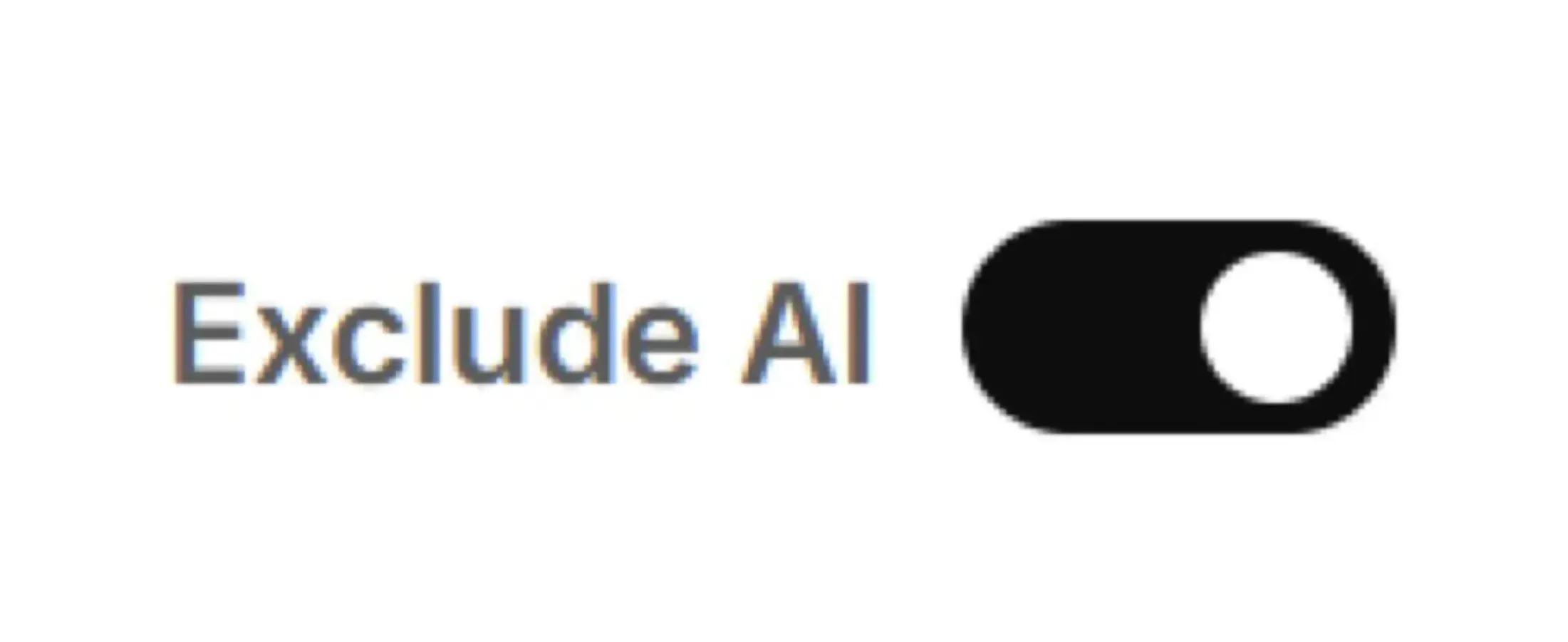

This week, while scrolling on X, I stumbled upon this post (quote to the original).

It felt so weird to see this button. Why did we end up on this timeline where AI is literally forced on us? When did “Exclude AI” become a feature, instead of the default? Why and when did we accept that AI is everywhere, so that now we have to fight for the right to experience the internet without it?

What’s even weirder is how rare this button is. Why doesn’t anyone else do this? Why is it so hard to find a simple, honest way to say, “No thanks, I don’t want AI involved in this part of my experience”? It’s like the industry is moving so fast to bake AI into everything that user choice is an afterthought.

As a developer, this really hit home for me. It’s a reminder that every time we add a new AI-related feature, we need to ask: Are we giving users real control? Or are we just assuming they’ll go along with whatever we build? That’s why, on WHY, we’re thinking hard about consent and transparency. If we ever add an “Exclude AI” button, it won’t be an afterthought or a band-aid. It’ll be a core part of how we respect our users’ choices from the start.

Positive Uses of AI in the Background

But here’s the flip side: when AI is done right, it can be amazing, and almost invisible.

Email providers often use AI to filter out spam. Just imagine opening your inbox and seeing 200 spam emails sitting there. That mostly doesn't happen, because filtering makes running a spam operation several times more expensive than it would otherwise be. So thanks a lot, AI, for this one.

Live translation. I don't even know if I should explain this one, but the way AI helps people talk across language barriers is nice. The same applies to any accessibility app, like the ones for visual impairment (text-to-speech, object recognition).

Personalisation may also be an example, depending on how it's done. Services like Spotify and Netflix use collected data to recommend music, TV shows and movies, and it works! We at WHY are not so keen on using user data, so we will just leave this example here. It's not AI thrown in your face, it works great, and it serves its purpose. You decide if it's good or bad.

And then there's the actual AI made for users, like chatbots. No comment on this: you've probably already tried ChatGPT, Gemini, Claude, Grok or some other app like that.

But WHY do we get it forced upon us?

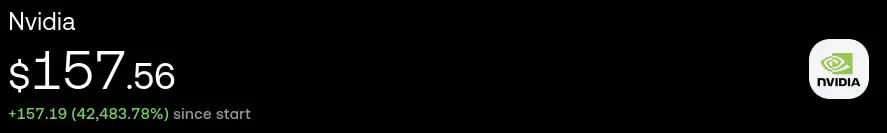

Well, from my perspective: money. Nvidia's stock (they make the chips needed to train AI models) says it all for me.

A 424,853% increase? There it is, a lot of money!

After Nvidia’s stock went through the roof and every tech headline started screaming about the “AI revolution,” it’s like companies are terrified of being left behind. But somewhere along the way, it feels like they stopped asking if these features actually help us, or if we even want them.

From my perspective, a lot of this is about image and competition. Companies want to look cutting-edge, so they rush to add AI-powered summaries, chatbots, or recommendations, even if those features aren’t fully baked.

But I feel like most of the time it's just stupid features made to brag about: now there’s an “AI assistant” popping up everywhere, whether I need it or not. Why would I talk to an AI assistant when I buy a new phone, especially when all the responses I get are "As an AI language model, I cannot perform..."?

It’s like they’re more interested in ticking the “AI” box for investors and press releases than actually improving the product for real people. Honestly, it’s frustrating. I love what AI can do when it’s used thoughtfully, but I wish more companies would slow down and think about the user experience. Forced AI features make me feel like I’m losing control over the apps I rely on every day. I just want tech that respects my choices and actually makes my life easier, not more complicated.

How WHY Handles AI

This brings me back to WHY, this specific social media app. We built WHY because we were tired of the big platforms forcing features (and ads, and censorship) on users. Our philosophy is simple: users should be in control, not the other way around. All our code is open-source and up on GitHub. If we use AI, you can see exactly how and why. AI will help us in the background, but we’re careful to keep it non-intrusive and privacy-respecting. If we ever add a new AI feature, it will be an opt-in, rarely an opt-out. We’re also making everything GDPR-compliant, which means we take data rights seriously. Our community helps keep us honest: if someone spots a problem, they can call us out, and we fix it in the open. That’s the beauty of open source.
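To make "opt-in, rarely an opt-out" a bit more concrete, here is a minimal sketch of what that default could look like in code. To be clear, this is not taken from the WHY repository: the `AIConsent` class, the `build_feed` function and the `"feed_summary"` feature name are all made up for illustration. The only point is that nothing AI-related runs until the user has explicitly said yes.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AIConsent:
    """Hypothetical per-user record of explicitly enabled AI features."""

    # Starts empty: no AI feature is on until the user opts in.
    enabled: set[str] = field(default_factory=set)

    def opt_in(self, feature: str) -> None:
        self.enabled.add(feature)

    def opt_out(self, feature: str) -> None:
        # discard() is a no-op if the feature was never enabled.
        self.enabled.discard(feature)

    def allows(self, feature: str) -> bool:
        # Unknown or never-enabled features are treated as "no".
        return feature in self.enabled


def build_feed(posts: list[str], consent: AIConsent) -> list[str]:
    """Return the feed, adding an AI summary line only with consent."""
    feed = list(posts)
    if consent.allows("feed_summary"):  # illustrative feature name
        feed.insert(0, f"[AI summary of {len(posts)} posts]")
    return feed


if __name__ == "__main__":
    consent = AIConsent()
    print(build_feed(["post one", "post two"], consent))  # no summary line
    consent.opt_in("feed_summary")
    print(build_feed(["post one", "post two"], consent))  # summary line first
```

The design choice that matters is the empty default. A new AI feature shipped this way stays invisible to everyone who never asked for it, and opting back out is a single call.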

As AI keeps evolving, I think it’s more important than ever for developers to listen to users. Forced AI features might look good in a product demo, but they erode trust and can even land you in legal hot water. At WHY, we’re trying to set a new standard: AI that empowers, not imposes. If you’re building apps, I hope you’ll join us in putting users first. And if you’re a user, keep demanding transparency and control. That’s how we make sure technology serves us, not the other way around.

Thanks for reading! If you want to see how we’re doing things differently, check out our GitHub or join the conversation in our community. Let’s build a better, more user-friendly future for AI in apps, together.